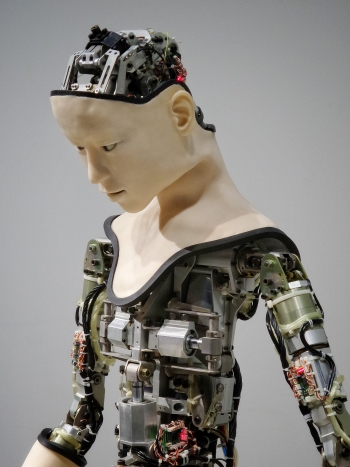

Is Artificial Intelligence the Future of Mental Health Treatment?

What if you could interact with a therapist, learn new skills to improve your well-being, and gain access to information normally taught in therapy, all for a fraction of the cost or for free, without leaving your home? The prospect is certainly alluring, and with Artificial Intelligence (AI) technology, there is reason to take it seriously.

AI technology for mental health was first developed in the 1960s with ELIZA, a simple computer program. AI has since been developed for use in other areas, including assisting therapists with diagnosing depression and PTSD in the US armed forces. Recently, the integration of AI with smartphone technology has opened up opportunities to provide mental health support that is not possible with traditional in-person therapy.

An AI Chatbot is an interactive program hosting a two-way text or voice conversation that can deliver structured therapies such as Cognitive Behavioral Therapy or journaling therapy. AI systems such as WoeBot or Tess teach therapeutic skills that people can apply on their own, similar to the skills therapists would teach their patients. These Chatbots can text or email users daily reminders to complete exercises such as journaling, mood check-in diaries, mindfulness meditation and thought re-assessment.

Gale Lucas, a psychology researcher at the University of Southern California who studies human-computer interactions, spoke with the Trauma and Mental Health Report (TMHR) about how AI Chatbots can bridge gaps that exist in accessing therapy:

“There is a shortage of human therapists and they’re not available 24/7. They’re also not available in remote places, and people are always hesitant to seek mental health treatment because of stigma. For these reasons, there are benefits to using AI because they’re always available no matter where you are, and there is no stigma associated with them. We are showing that people are more willing to open up to an AI than they would with just an online form at a self-help website which is more like a textbook. Having an actual conversation puts you in a different mode to really encourage sharing, openness and self-reflection.”

Beyond providing therapy, AI technology has the potential to support mental health and wellbeing more broadly. AskAri, an AI Chatbot developed by Albert “Skip” Rizzo at the University of Southern California, teaches students self-care skills and offers mental health support and information to help them engage in campus life. In an interview with the TMHR, Rizzo explained how AskAri interacts with users:

“A student may pop up on the system and say: ‘I’m really lonely. I just moved here from Korea, and I don’t have any friends. I’ve always lived with my family. I miss them.’ The AI character might go into a mini soliloquy about loneliness and its impact but suggest a Korean social group that exists and meets every week at this location. AskAri provides a combination of psychoeducation, but also sort of a concierge providing information that might be helpful. It was first developed as an interactive Chatbot to provide some support for students waiting to see a counsellor but ended up growing in content so that it really went beyond filling in the gap for students waiting to see a live provider, and more towards something that anybody could get some benefit from.”

Despite these benefits, AI Chatbots are unlikely to completely replace in-person therapy. Lucas emphasizes that shallow interactions are characteristic of AI therapy systems. While this “social snacking” temporarily fulfills an individual’s need for social contact, it cannot be a permanent solution to the inborn human need for social bonding or a substitute for the deep connection between a therapist and their client.

Concerns have also been raised about the safety of users in distress, privacy and data protection, the risk of psychological transference during human-computer interactions, and the long-term effects of interacting with AI. Alena Buyx, a specialist in Ethics in Medicine and Health Technology at the Technical University of Munich, shared her concerns with the TMHR:

“One of the things that is lacking is a clear plan on how we can implement this technology into the clinic responsibly. How these tools should be used, should therapists be trained on it, how to deal with patients that will increasingly use this outside of traditional therapy? All these kinds of questions need to be addressed: the responsibility, the accountability, the data, ethical implications, we need to find a balance to do this well. If we just let this out in the world as it is now, without any consideration, that would be a problem.”

It is important that individuals, therapists, and researchers alike take into consideration the benefits and drawbacks of using AI technology. While the potential for AI Chatbots to assist those in need of mental health support sparks curiosity, skepticism remains high, and more investigation needs to be done.

-Lotus Huyen Vu, Contributing Writer

Image Credits:

Feature: H Heyerlein at Unsplash, Creative Commons

First: Andy Kelly at Unsplash, Creative Commons

Second: Franck V. at Unsplash, Creative Commons